R is often described as a statistical programming environment, because - while it does get used for an incredible variety of purposes - it began its life as a tool for helping statisticians analyse data. As such, it has an unrivalled collection of statistical packages built into it, and I’ll talk a little about some of them later. However, before starting to talk about statistics, it’s very useful to talk about probability distributions and how they are usually implemented in R.

An useful place to start is by considering the difference between probability and statistics. Probability theory concerns “the doctrine of chances”. It’s a branch of mathematics that tells you how often different kinds of events will happen, given some assumptions about the world. Some examples:

- What are the chances of a fair coin coming up heads 10 times in a row?

- If I roll two six sided dice, how likely is it that I’ll roll two sixes?

- How likely is it that five cards drawn from a perfectly shuffled deck will all be hearts?

- What are the chances that I’ll win the lottery?

The critical point is that probabilistic questions start with a known model of the world, and we use that model to do some calculations.

What about statistics? Statistical questions work the other way around. In statistics, we do not know the truth about the world. All we have is the data, and it is from the data that we want to learn the truth about the world. Statistical questions tend to look more like these:

- If I flip a coin 10 times and gets 10 heads, is someone playing a trick on me?

- If five cards off the top of the deck are all hearts, how likely is it that the deck was shuffled?

- If the lottery commissioner’s spouse wins the lottery, how likely is it that the lottery was rigged?

This time around, the only thing we have are data. What I know is that I saw my friend flip the coin 10 times and it came up heads every time. And what I want to infer is whether or not I should conclude that what I just saw was actually a fair coin being flipped 10 times in a row, or whether I should suspect that my friend is playing a trick on me. To help me solve this problem I might construct two probabilistic models, one assuming this is a fair coin and the other assuming that the data are a trick, and do some comparison of the two. Viewed this way, the statistical inference problem is to figure out which of these probability models is right. Clearly, the statistical question isn’t the same as the probability question, but they’re connected to one another.

19.1 Two views of probability

Let’s start with a simple question that doesn’t have a simple answer: what is “probability”? It might seem surprising, but while statisticians and mathematicians (mostly) agree on what the rules of probability are, there’s much less of a consensus on what the word really means. In the statistic literature there are (at least) two qualitatively different ideas about how to define the term:

- The frequentist view defines probability as long-run frequency. Suppose we were to try flipping a fair coin, over and over again, and divide the number of heads \(n_h\) by the total number of coin flips \(n\), yielding \(p_h = n_h/n\) as the observed proportion. The frequentists argue that the only way to meaningfully define the idea of probability is in terms of what happens to this empirically observed proportion a the sample size becomes arbitrarily large (i.e., \(n \rightarrow \infty\)). In the long run, the proportion of heads will eventually converge to 50%. There are some subtle technicalities that the mathematicians care about, but qualitatively speaking, that’s how the frequentists define probability. Probability is a “thing in the world”

- The Bayesian view is often called the subjectivist view. It used to be a minority view among statisticians, but it’s been steadily gaining traction for the last several decades - it’s common enough that it’s no longer an unusual approach to statistics. The most common way of thinking about subjective probability is to define the probability of an event as the degree of belief that a rational agent assigns to that truth of that event. From that perspective, probabilities don’t exist in the world, but rather in the thoughts and assumptions of people and other intelligent beings. In order for this approach to work, we need some way of operationalising “degree of belief”. One way that you can do this is to formalise it in terms of “rational gambling”, though there are many other ways. Suppose that I believe that there’s a 60% probability of rain tomorrow. If someone offers me a bet: if it rains tomorrow, then I win $5, but if it doesn’t rain then I lose $5. Clearly, from my perspective, this is a pretty good bet. On the other hand, if I think that the probability of rain is only 40%, then it’s a bad bet to take. Thus, we can operationalise the notion of a “subjective probability” in terms of what bets I’m willing to accept. Probability, from this perspective, is a “thing in the head”.

My personal view is much closer to the Bayesian perspective, but I’m also a pragmatist and I use both Bayesian and frequentist methods in my work. In any case, regardless of which version you prefer, Bayesians and frequentists agree on the core mechanics of probability theory, so the tools for working with probabilities in R are the same regardless of which school of thought you prefer!

19.2 Sampling from a set

The sample() function is an extremely useful tool. Suppose I have a set of 10 stimuli that I want to present to people in a random order. For simplicity I’ll label the items using letters:1

stimuli <- letters[1:10]

stimuli## [1] "a" "b" "c" "d" "e" "f" "g" "h" "i" "j"To sample them in a random order, all I need to do is this

shuffled_stimuli <- sample(stimuli)

shuffled_stimuli## [1] "a" "e" "g" "h" "j" "f" "d" "c" "i" "b"Or to do the same thing with piped code…

shuffled_stimuli <- stimuli %>% sample()

shuffled_stimuli## [1] "a" "d" "e" "b" "j" "f" "c" "g" "i" "h"Notice that the output the second time around isn’t the same a the output the first time. The sample() function uses a pseudo-random number generator to order the items differently every time.

for(i in 1:5) {

stimuli %>%

sample() %>%

print()

}## [1] "i" "c" "f" "e" "j" "h" "b" "g" "d" "a"

## [1] "j" "c" "i" "b" "h" "e" "a" "d" "f" "g"

## [1] "b" "j" "c" "d" "a" "f" "g" "i" "e" "h"

## [1] "i" "b" "h" "e" "f" "d" "c" "g" "a" "j"

## [1] "j" "f" "d" "h" "e" "i" "b" "a" "c" "g"As you can see if you feed in a vector of inputs, the default behaviour is to shuffle all the items. However, the sample() function is flexible. For example, “shuffling all the items” is a special case of “sampling without replacement”. Imagine taking all the stimuli (letters), and then placing them into a jar. To shuffle them into a random order all you need to do is shake the jar (randomise) pull them out of the jar (sample) one at a time until the jar is empty (no replacement).

The jars metaphor is nice because we can extend it. Suppose we only want to pull 6 of the 10 stimuli out of the jar:

stimuli %>% sample(size = 6)## [1] "b" "g" "i" "e" "h" "c"Again, there are no repeats (it is impossible to pull the same item from the jar twice), but we stopped before pulling everything out. Here’s a loop showing you how the randomisation gives different answers every time, but it always follows the constraints of stopping at 6 draws and never draws the same item twice:

for(i in 1:5) {

stimuli %>%

sample(size = 6) %>%

print()

}## [1] "g" "j" "h" "f" "i" "b"

## [1] "c" "a" "i" "e" "g" "d"

## [1] "d" "a" "e" "h" "i" "f"

## [1] "j" "f" "g" "h" "c" "d"

## [1] "h" "j" "b" "i" "d" "g"Another way to extend the jars metaphor is to sample with replacement. In this version of the sampling scheme, every time we pull a letter out of the jar we write it down, but then put it back in the jar so that it becomes possible to sample it again.

for(i in 1:5) {

stimuli %>%

sample(size = 15, replace = TRUE) %>%

print()

}## [1] "h" "d" "j" "b" "d" "a" "f" "g" "f" "h" "i" "h" "f" "c" "c"

## [1] "i" "e" "h" "i" "f" "e" "g" "f" "j" "g" "c" "h" "b" "f" "f"

## [1] "b" "e" "c" "j" "a" "a" "i" "a" "a" "b" "i" "j" "b" "c" "i"

## [1] "f" "a" "b" "c" "c" "f" "a" "b" "e" "g" "i" "i" "c" "h" "i"

## [1] "e" "h" "f" "a" "e" "b" "e" "c" "a" "j" "h" "i" "e" "f" "e"Notice that this time you can produce sequences of stimuli that are longer than the original set (because you’re putting them back in the jar). In this case even though I only had 10 items, each output has 15 samples from that set: there are of course repeats!

A final way we can extend the metaphor is to imagine that some of the letters are written on larger pieces of paper than others: so when you reach into the hat you’re more likely to pull out the larger ones.

weights <- 1:10 # weight the later letters more!You can use this when sampling with replacement and without. Here’s what it looks like when sampling without replacement:

for(i in 1:5) {

stimuli %>%

sample(size = 10, replace = FALSE, prob = weights) %>%

print()

}## [1] "h" "j" "e" "i" "f" "g" "b" "d" "c" "a"

## [1] "h" "b" "i" "d" "c" "e" "j" "a" "g" "f"

## [1] "f" "i" "e" "b" "h" "g" "j" "d" "c" "a"

## [1] "d" "g" "h" "f" "i" "j" "e" "b" "c" "a"

## [1] "b" "a" "e" "i" "c" "h" "j" "d" "g" "f"So in this output every line shuffles the 10 items, but there’s a definite bias in how the items are ordered! We’re much more likely to start with the later letters than with the early ones!

We can also do this when sampling with replacement:

for(i in 1:5) {

stimuli %>%

sample(size = 10, replace = TRUE, prob = weights) %>%

print()

}## [1] "h" "i" "j" "e" "g" "i" "i" "i" "f" "h"

## [1] "e" "i" "h" "i" "d" "h" "g" "g" "j" "i"

## [1] "h" "j" "h" "b" "h" "h" "g" "i" "d" "f"

## [1] "f" "h" "d" "d" "h" "a" "g" "j" "h" "i"

## [1] "g" "i" "e" "j" "b" "h" "j" "b" "g" "h"In this output, you can see that we’re very unlikely to sample the letter “a”.

19.3 Binomial distribution

The sample() function gives you a good feel for how you can take a set of entities (stimuli, participants, outcomes, etc) and do probabilistic operations with them. When doing statistics we sometimes like to abstract away from the simple sampling mechanism and start talking in terms of probability distributions. To see how the abstraction works let’s introduce one of the simplest examples, the binomial distribution. Imagine we have a six sided die, in which four sides are coloured "blue" and two sides are coloured "red". Let’s roll the die 20 times and see what we get:

die <- c("blue","blue","blue","blue","red","red")

rolls <- sample(die, size = 20, replace = TRUE)

rolls## [1] "blue" "red" "red" "red" "red" "blue" "red" "red"

## [9] "blue" "blue" "blue" "blue" "red" "blue" "red" "blue"

## [17] "blue" "blue" "blue" "blue"We can count the number of times the result was "blue":

n_blue <- sum(rolls == "blue")

n_blue## [1] 12Of course, there’s nothing stopping us from repeating this exercise several times:

for(i in 1:5){

rolls <- die %>% sample(size = 20, replace = TRUE)

n_blue <- sum(rolls == "blue")

print(n_blue)

}## [1] 15

## [1] 17

## [1] 12

## [1] 10

## [1] 11In fact, let’s go all out on this. Let’s replicate this tiny experiment 100,000 times because that’s easy to do with R:

n_replications <- 100000

n_blue <- numeric(length = n_replications)

for(r in 1:n_replications){

rolls <- die %>% sample(size = 20, replace = TRUE)

n_blue[r] <- sum(rolls == "blue")

}

n_blue <- factor(n_blue, levels = 0:20, ordered = TRUE)

frequencies <- table(n_blue)

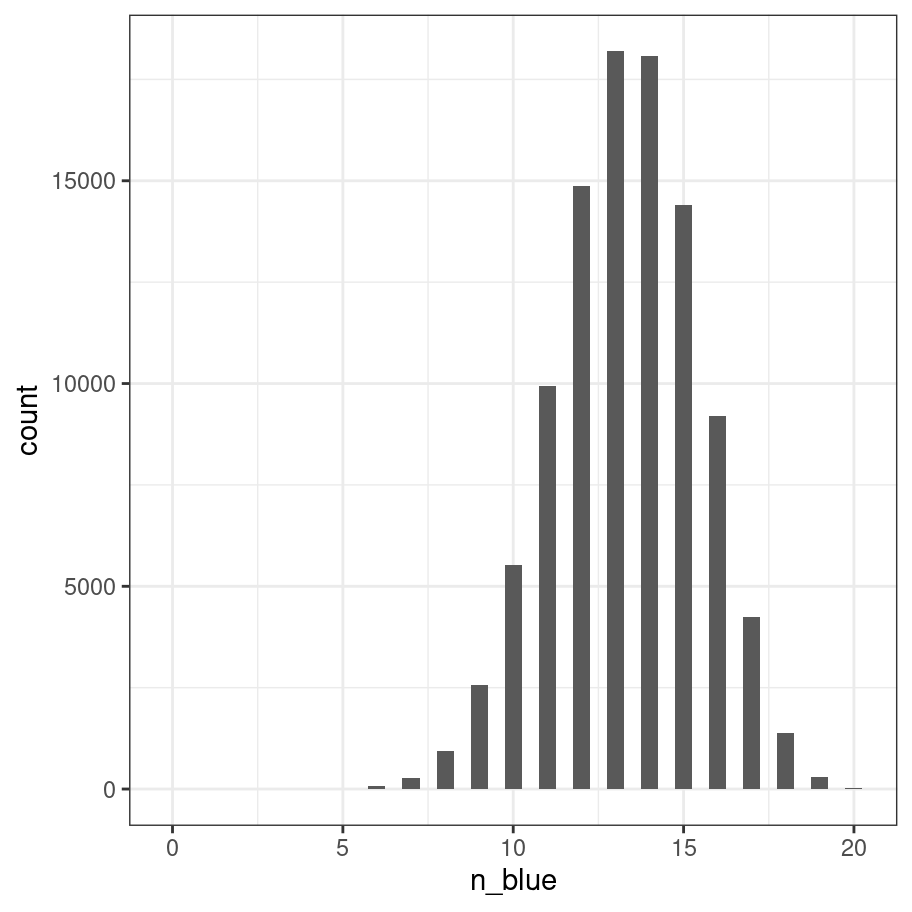

frequencies## n_blue

## 0 1 2 3 4 5 6 7 8 9 10 11

## 0 0 0 1 0 13 74 279 939 2562 5513 9939

## 12 13 14 15 16 17 18 19 20

## 14867 18188 18073 14409 9210 4234 1375 297 27With this particular die the probability of observing a "blue" on any one roll is two-thirds (4 out of 6 sides) and not surprisingly the outcomes of this “roll the die 20 times” experiment tend to be distributed mostly between 12 and 15. I hate looking at tables of numbers, so let’s draw a picture:

as_tibble(frequencies, n = "count") %>%

mutate(n_blue = as.numeric(n_blue)) %>%

ggplot(aes(x=n_blue, y = count)) +

geom_col(width = .5) +

theme_bw()

This picture is essentially a visualisation of the binomial distribution with success probability prob = 2/3, so it’s worth taking a moment to be explicit about what we’ve done. Every one of our experiments produces an outcome (number of blues) that can be described as one random draw from the binomial distribution. So the 100000 replications of the experiment can be viewed as a set of 100000 numbers sampled from the binomial. R contains a function rbinom() that we can use to do this directly:

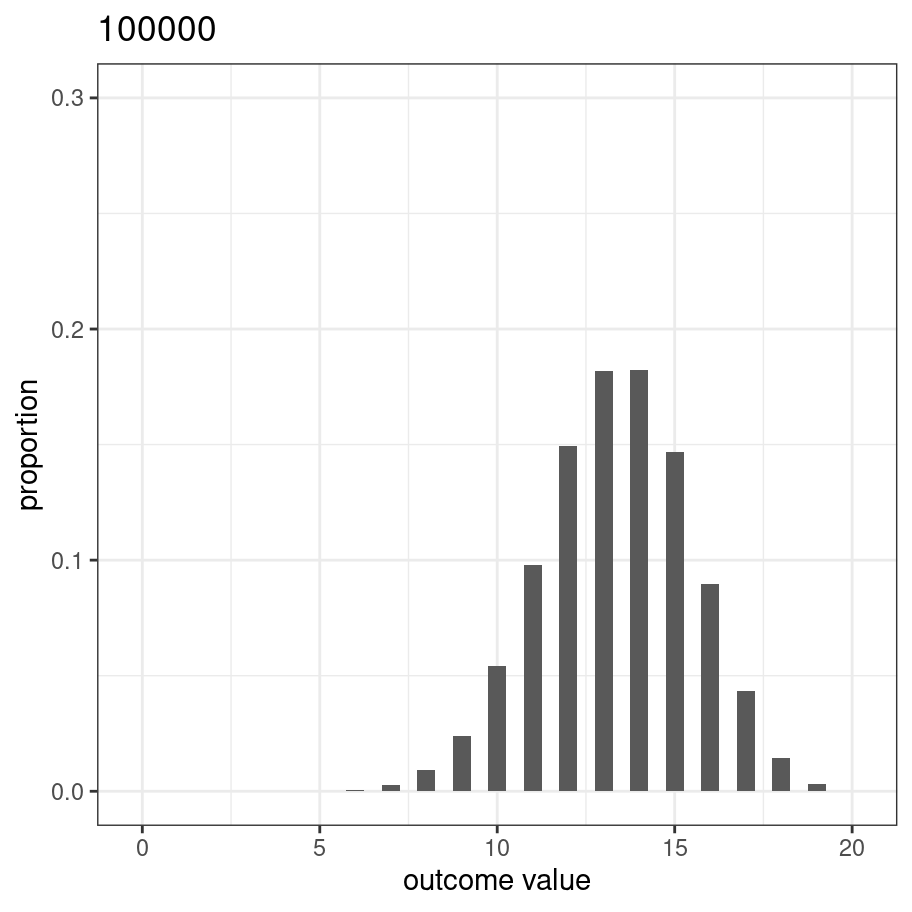

n_blue <- rbinom(n = 100000, size = 20, prob = 2/3)If we process this set of numbers using the same code, we get an almost identical figure. In fact, because I’m going to reuse this code, let’s write a function:

plot_samples <- function(x, size = 20) {

x <- factor(x, levels = 0:size)

frequencies <- table(x)

proportion <- frequencies / sum(frequencies)

pic <- as_tibble(proportion, n = "proportion") %>%

mutate(x = as.numeric(x)) %>%

ggplot(aes(x=x, y = proportion)) +

geom_col(width = .5) +

xlab("outcome value") +

ggtitle(sum(frequencies)) +

ylim(0,.3) +

theme_bw()

return(pic)

}Now call it:

pic <- plot_samples(n_blue)

plot(pic)

Of course, the only reason it looks this nice and smooth is that we replicated the experiment 100000 times. Let’s modify the code so the we start out with a relatively small number of replications and watch it smooth out as it gets larger:

for(rep in seq(from = 50, to = 10000, by = 50)){

n_blue <- rbinom(n = rep, size = 20, prob = 2/3)

pic <- plot_samples(n_blue)

plot(pic)

}If you were typing this at the console, this loop would produce a sequence of plots, but what I’ve done (using some clever features of R Markdown) is wrap it up as an animation. Later on I’ll talk about how to make animations.

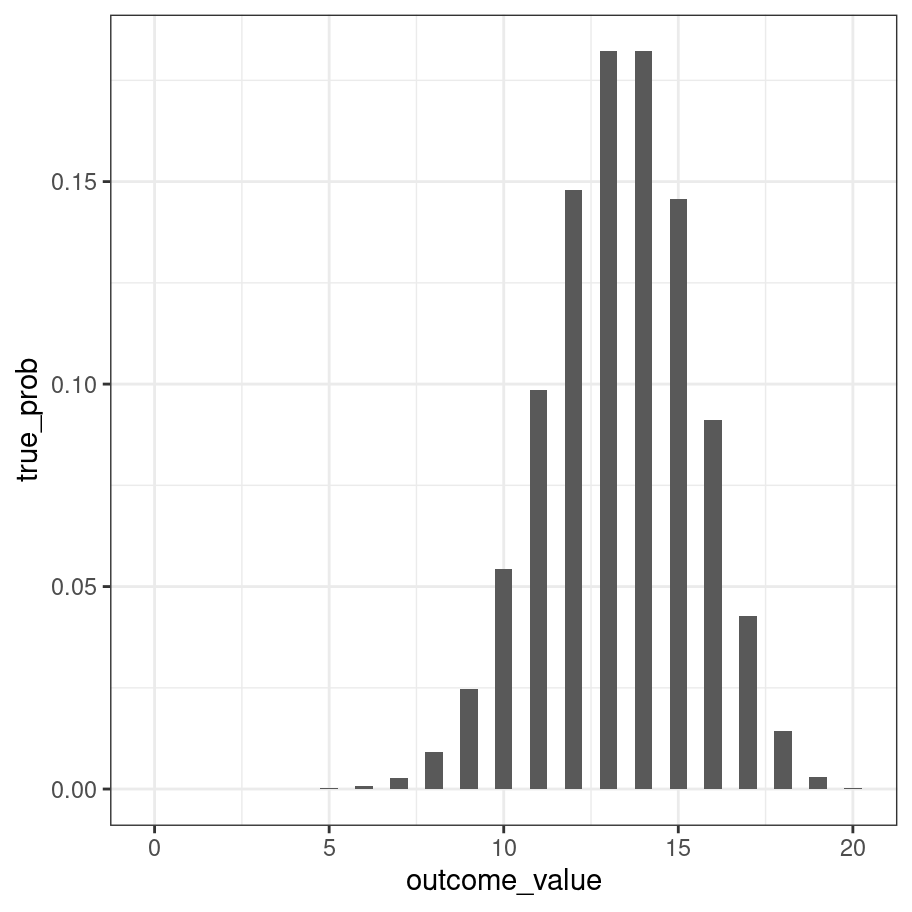

A natural question to ask is about the true probility of obtaining each outcome. One way to do it (approximately) would be to generate very large number of samples and then calculate the proportion of times that we end up with (say) 12 out of 20 rolls being blue. However, there’s an easier way. It turns out there is a formula for this:

\[ P(k | \theta, n) = \frac{n!}{k!(n-k)!} \theta^k (1-\theta)^{n-k} \] where \(n! = n \times (n-1) \times (n-2) \times \ldots \times 2 \times 1\) refers to n factorial. For some people it can be pretty jarring to see things written mathematically when you’re used to thinking verbally or in terms of R code, so let’s translate that to a function:

binomial_prob <- function(k, n, theta) {

first_bit <- factorial(n) / (factorial(k) * factorial(n - k))

second_bit <- (theta ^ k) * (1 - theta)^(n - k)

return(first_bit * second_bit)

}Of course, R already has a function that does this called dbinom():

outcome_value <- 0:20

true_prob <- dbinom(x = outcome_value, size = 20, prob = 2/3)

true_prob## [1] 2.867972e-10 1.147189e-08 2.179659e-07 2.615590e-06

## [5] 2.223252e-05 1.422881e-04 7.114406e-04 2.845762e-03

## [9] 9.248728e-03 2.466327e-02 5.425920e-02 9.865310e-02

## [13] 1.479796e-01 1.821288e-01 1.821288e-01 1.457030e-01

## [17] 9.106440e-02 4.285383e-02 1.428461e-02 3.007287e-03

## [21] 3.007287e-04But just to confirm that our function actually does the same thing as the version R provides:

binomial_prob(k = 13, n = 20, theta = 2/3)## [1] 0.1821288Or, since we like pictures so much…

tibble(outcome_value, true_prob) %>%

ggplot(aes(x = outcome_value, y = true_prob)) +

geom_col(width = .5) +

theme_bw()

Cool.

The animation below shows how the binomial distribution changes as we shift the value of prob:

Here’s another one that shows what happens as we change the size of the experiment.

You can see the central limit theorem in action here! As the size gets larger, the shape of the binomial distribution gets progressively closer to normal. Speaking of which…

19.4 Normal distribution

The normal distribution is the perhaps the most widely used distribution in statistics, so I should talk about it in some detail. It’s also a good moment to talk about how the tools for working with probability distributions in R are structured. As a rule, any distribution that you want to work with in R will be associated with four separate functions. If I want to work with a normal distribution, for instance, there are four different functions - rnorm, dnorm, pnorm and qnorm. If I want to work with a uniform distribution, the functions are named runif, dunif, punif and qunif. For a binomial distribution, they are rbinom, dbinom, pbinom and qbinom. The four versions are:

- The r form is a random number generator: you can use it to sample

nrandom outcomes from the distribution. - The d form computes the probability (or probability density) with which you would observe a particular number

xif it is generated from this distribution. - The p form is the cumulative distribution function. You specify a particular value

q, and it tells you the probability of obtaining an outcome smaller than or equal toq. - The q form calculates the quantiles of the distribution. You specify a probability value

p, and gives you the corresponding percentile. That is, the value of the variable for which there’s a probabilitypof obtaining an outcome lower thanq.

19.4.1 Random sampling

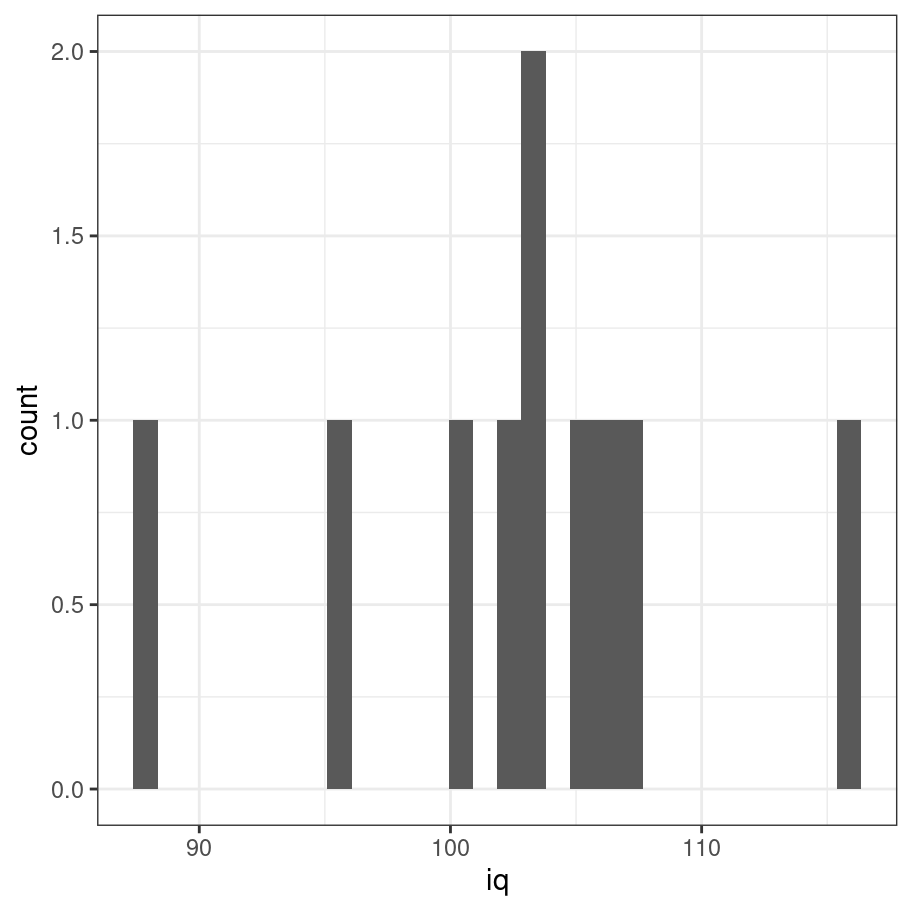

Let’s start with a classic example in the psychological context. By convention, measures of cognitive ability (IQ scores) are designed to have a mean of \(\mu = 100\) and a standard deviation of \(\sigma = 15\). The rnorm() function allows us to generate normally distributed numbers:

iq <- rnorm(n = 10, mean = 100, sd = 15)

iq## [1] 102.96236 107.18382 115.74983 102.33999 100.28263 105.77267

## [7] 87.82983 104.60808 102.62654 96.34584In a real IQ battery such as the WAIS you would probably get results rounded to the nearest whole number, so it probably makes more sense to think of this as the data:

iq <- round(iq)

iq## [1] 103 107 116 102 100 106 88 105 103 96If we draw a quick histogram of this…

tibble(iq) %>% ggplot(aes(x = iq)) + geom_histogram() + theme_bw()

… it’s pretty obvious that you can’t tell much about the distribution. So let’s increase the sample size to 1000:

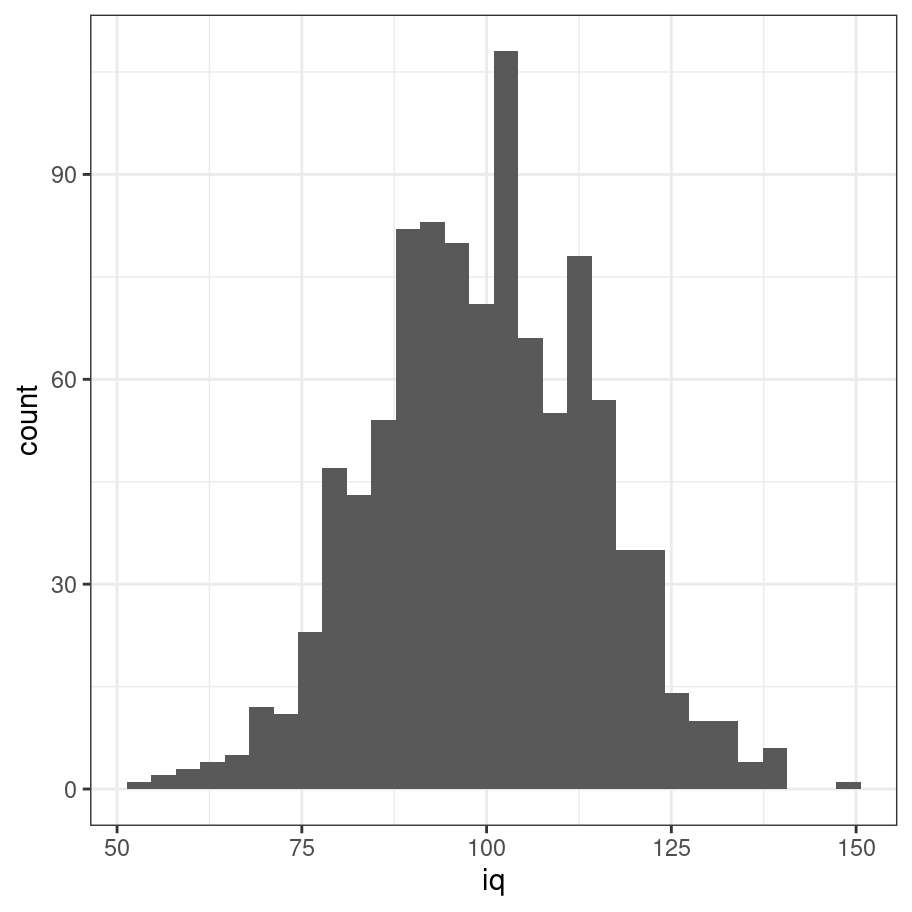

iq <- rnorm(n = 1000, mean = 100, sd = 15) %>% round

tibble(iq) %>% ggplot(aes(x = iq)) + geom_histogram() + theme_bw()

That looks a lot more like the shape we were expecting!

19.4.2 Probability density

Much like the binomial distribution you can imagine that as the sample size gets larger, this shape will smooth out and it will eventually look like a perfect bell curve. As before there is a formula that describes the probability density:

\[

P(x | \mu, \sigma) = \frac{1}{\sqrt{2\pi} \sigma} \exp \left( -\frac{(x-\mu)^2}{2\sigma^2} \right)

\] Just like with the dbinom function, we could implement our own version of it if we really wanted. But why bother? R already does this and does it in a much more efficient way than you or I would. So let’s cut to the chase and use the dnorm function to do the work:

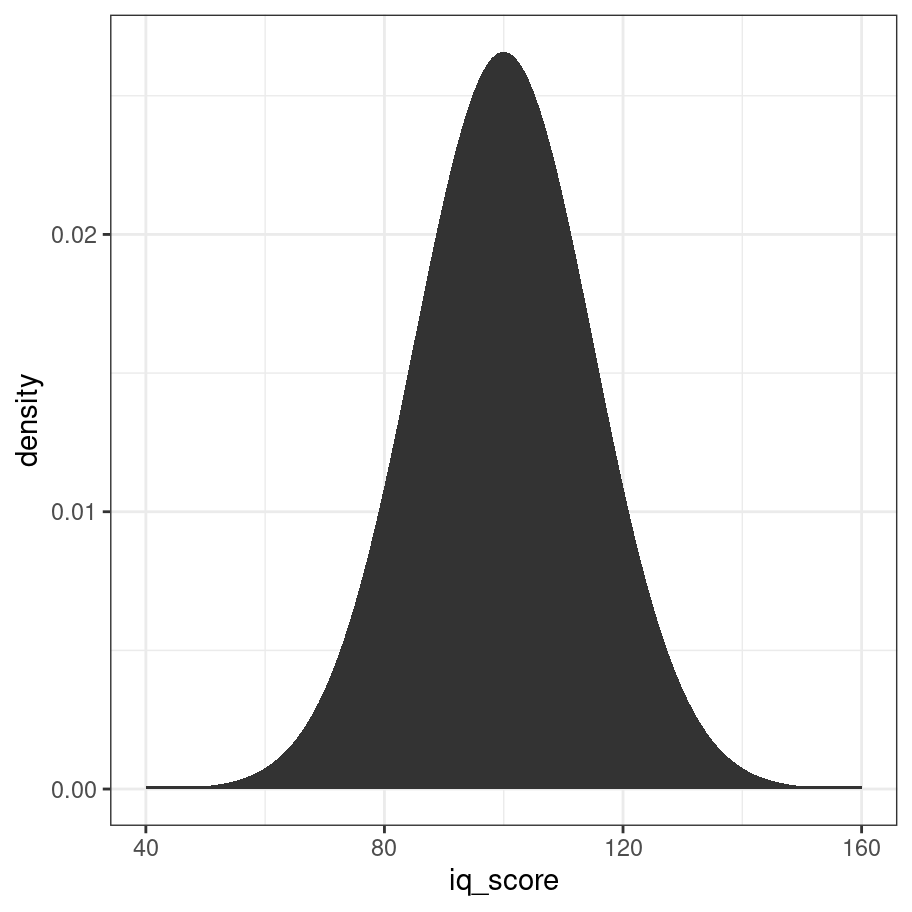

iq_score <- 40:160

density <- iq_score %>% dnorm(mean = 100, sd = 15)

density <- ifelse(density < 1e-4, 1e-4, density)

tibble(iq_score, density) %>%

ggplot(aes(x=iq_score,y=density)) +

geom_area() +

theme_bw()

You’re probably already familiar with how the parameters of the normal distribution work, but even so it’s nice to look at some pretty animations. In the first one, we can see what happens when we shift the mean (i.e. \(\mu\)) of the distribution:

Not too surprising. It’s maybe a little more informative to look at what happens when we change the standard deviation \(\sigma\):

19.4.3 Cumulative probability

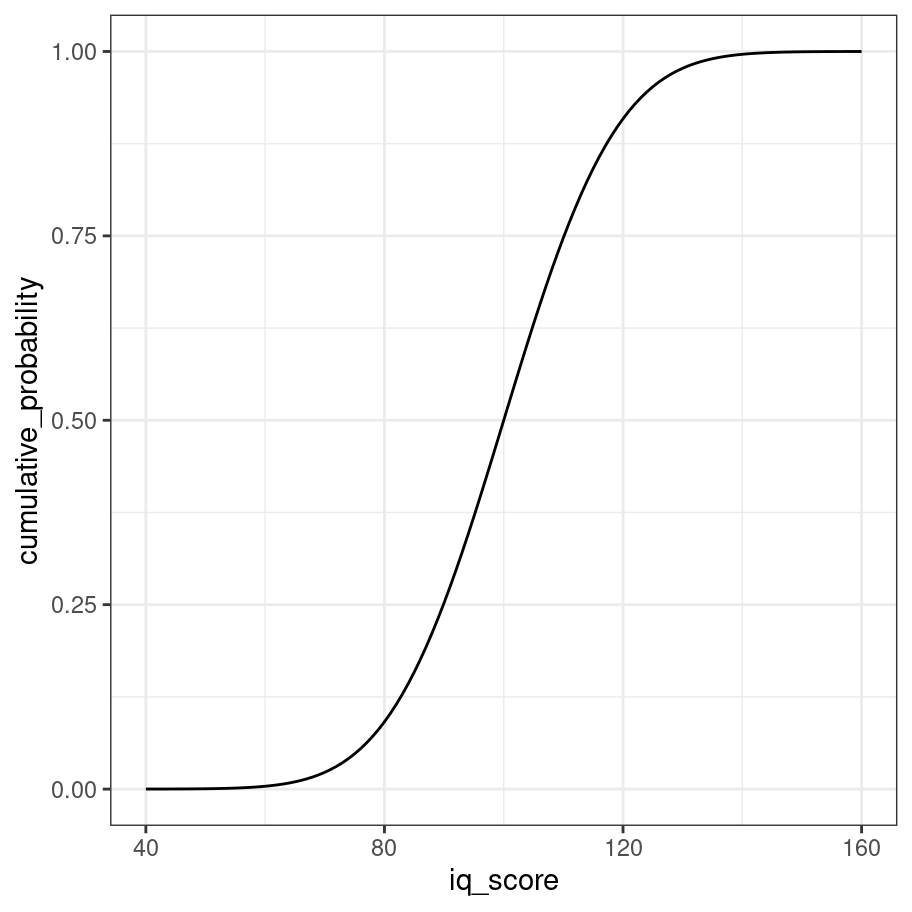

The third tool for working with normal distributions is the pnorm() function, which calculates the cumulative distribution function (CDF) for the normal distribution. The CDF describes the probablity that the value \(x\) sampled from the normal distribution will be smaller than a particular quantile \(q\). That’s a little abstract, but suppose our question was to ask the probability that someone has an IQ of 110 or below. We could compute that like this:

pnorm(q = 110, mean = 100, sd = 15)## [1] 0.7475075In other words, if an IQ test has been properly calibrated you’d expect about 75% of people to score 110 or below. As with our other examples we can draw the complete CDF for the distribution like this:

iq_score <- 40:160

cumulative_probability <- iq_score %>% pnorm(mean = 100, sd = 15)

tibble(iq_score, cumulative_probability) %>%

ggplot(aes(x = iq_score, y = cumulative_probability)) +

geom_line() +

theme_bw()

Just because we can, here’s an animation:

Hopefully you get the idea!

19.4.4 Quantiles

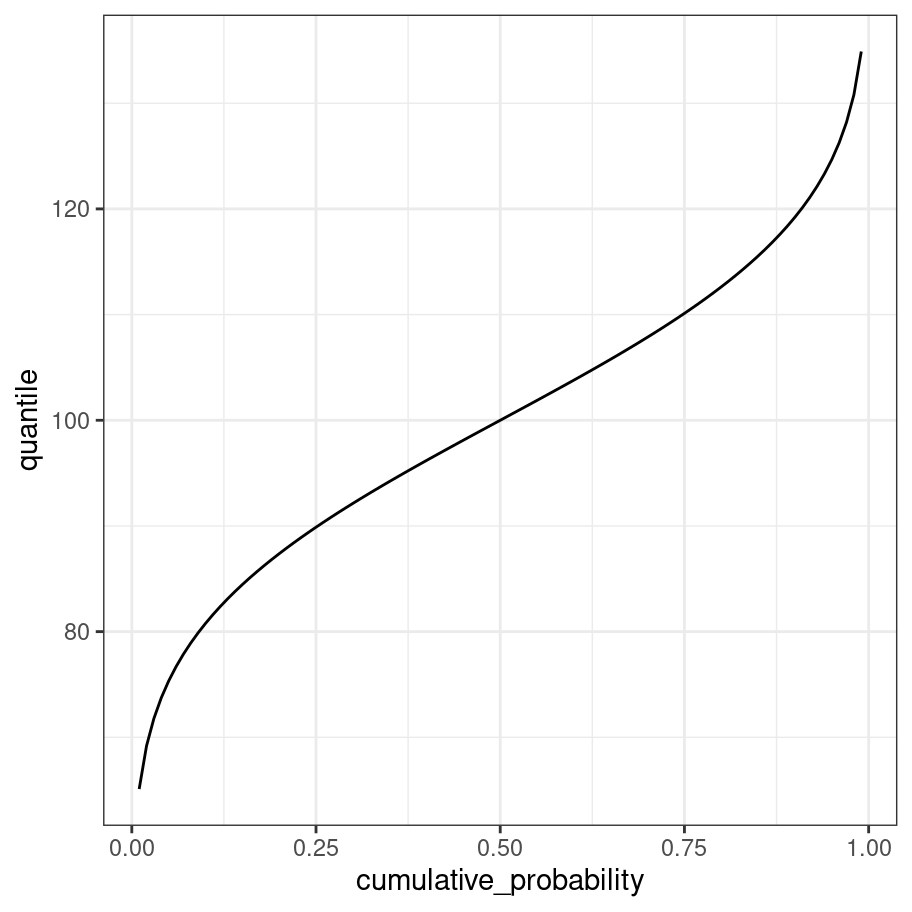

The quantile function is just the inverse of the cumulative distribution (i.e., x and y axes are swapped):

cumulative_probability <- seq(from = .01, to = .99, by = .01)

quantile <- cumulative_probability %>% qnorm(mean = 100, sd = 15)

tibble(quantile, cumulative_probability) %>%

ggplot(aes(x = cumulative_probability, y = quantile)) +

geom_line() +

theme_bw()

The quantile function can be especially useful for working out critical values. So for example, to work out the value of a standard normal that corresponds to the 2.5% lower tail:

qnorm(p = .025, mean = 0, sd = 1)## [1] -1.95996419.5 Other distributions

Not yet written!

19.5.1 Poisson distribution

19.5.2 Log-normal distribution

19.5.3 Response time distribution

19.5.4 Uniform distribution

19.5.5 Beta distribution

19.5.6 t distribution

19.5.7 Chi-square distribution

19.5.8 F distribution

The

lettersvector is a built in vector in R that contains the 26 lower case letters of the English alphabet in canonical order. There is also aLETTERSvector that has the upper case letters.↩︎